Practical AI for developers, part 1: intro, policies, and rewards

Learn how to confidently make AI apps without becoming an AI researcher. Part 1 introduces LLMs and how they make decisions.

As of writing, it’s way still way too hard to wrap your head around what is going on with AI—specifically LLMs—if you’re a programmer/developer/software engineer who wants to use AI in your products. In fact, we’re in dire need of paradigms to bridge the gap between high-level APIs like OpenAI and Replicate’s web endpoints for fine tuning models and Hugging Face’s libraries.

App developers need new paradigms for AI programming

The year is 2023, but we’re in a 2010ish moment when it comes to AI and app development. 2010 is when Backbone.js, the groundbreaking web framework, was first released. From that moment forward, we saw a rapid evolution of web frameworks but more importantly programming paradigms. Functional reactive programming (e.g., React, Redux) eventually …

Before we can make those paradigms as a community, though, we need better educational materials for app developers to understand what’s going on in the large language models (LLMs) that we use. Personally, I think acclaimed courses like Andrej Karpathy’s YouTube series and fast.ai still require too much time to go through.

I’m going to give it my best shot on this blog, explaining what I’ve learned about how AI works with fellow developers in mind. I assume that you’re already a programmer and that you have some minimal experience with LLMs, even if it’s just having played around with ChatGPT.

By the end of this series, you should have enough of a foundation in current AI systems, particularly LLMs and reinforcement learning (RL), to confidently be able to use higher-level libraries like Hugging Face’s Transformer Reinforcement Learning (TRL) to create and deploy AI-enabled apps.

Key terms will be bolded and italicized.

Note: I’m not an AI researcher, I’m mostly writing this stuff as I learn it, and I’m mostly using ChatGPT to tutor myself. If you find something wrong, please let me know in the comments! Keep in mind that I’m simplifying certain concepts to keep it appealing for developers.

Introduction

What is an AI?

The number one thing that you need to know about any AI system is that it’s a prediction machine.

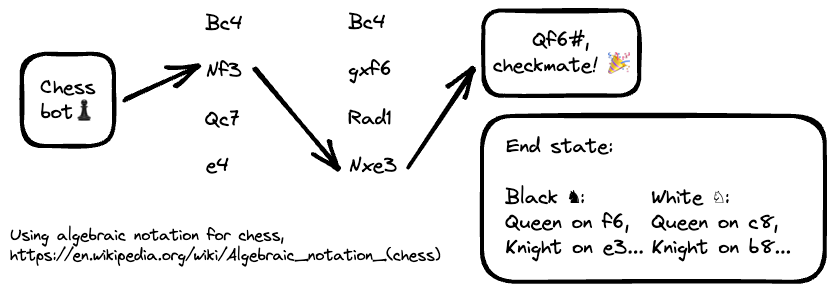

The entire purpose of AI is to predict what comes next. For example, an AI chess player predicts the next move. More abstractly, we can say that an AI like ChatGPT predicts the next word in a conversation. An image-generating AI like Midjourney predicts what’s the next pixel as it constructs an image.

For clarity, the community uses these technical terms when describing this prediction process:

The “AI” is called an agent. ChatGPT is an agent.

The thing being predicted is an action. Agents generate actions, one after the other.

This terminology is intuitive for agents that visibly do things, like play a game of chess: each move is an action. But more abstractly, you can think of any prediction as an action. Each word generated by ChatGPT and every pixel created by Midjourney is a “move.”

Agents always move toward a goal, and this is very important. No matter how human-like an agent appears, the most fundamental distinction between us and it is that every action leads toward a certain goal.

Put another way, the purpose of an agent is to execute tasks that can have a successful end state:

A chess-playing agent’s task is to play a game of chess that results in checkmate.

An image-generating agent’s task is to create an image that represents a prompt.

A chatbot’s task is to…hmm, does it have a task? Does it have an end state? There isn’t an actual end state since you can, in theory, have an infinitely long conversation with a chatbot, so we say that the chatbot has a continuous task. But for the purposes of teaching a chatbot how to speak, we feed it conversations of limited length that in fact do end, forming a kind of imaginary end state. More on this later.

The opposite of a continuous task is an episodic task. You can think of a single game of chess as an episode for a chess bot. It has a logical end with a well-defined successful end state.

One last foundational piece: just as task has a successful end state, every agent has a certain state at any given moment. State represents the agent’s environment, position, or general orientation:

A chess bot’s state in the middle of a game includes the current position of all the pieces on the board.

An image-generating agent’s state in the middle of creating an image includes all the pixels it’s painted so far.

A chatbot’s state in the middle of a conversation includes all of the words written so far in the conversation, both by it and the user.

Put in a more rigorous way, state includes all the data an agent needs to make the next action. It represents all progress that an agent has made so far in completing its task. AI agents, like humans, take their next action based on everything that’s happened up until that point.

At this point, we can update our answer to “what is an AI”:

An agent, commonly referred to as an AI, is a program that generates actions in order to complete a task. Specifically, it generates or “predicts” one action at a time with the aim of achieving a particular goal.

If the agent is working on an episodic task, then the task has a successful end state. Reaching that end state means that the agent as achieved its goal.

There are also continuous tasks, meaning tasks with no predefined end (we’re not sure yet how this fits into our mental model of AI).

At any given moment, an agent has a particular state. Each state represents all the info about the agent’s environment and its orientation in that environment—which often includes or implies info about every action taken up to the present—needed to take the next action.

But given a certain state, how does an agent figure out what the next action is?

Policies and rewards

Agents determine the next action using a policy, which means “a strategy for completing a task.”

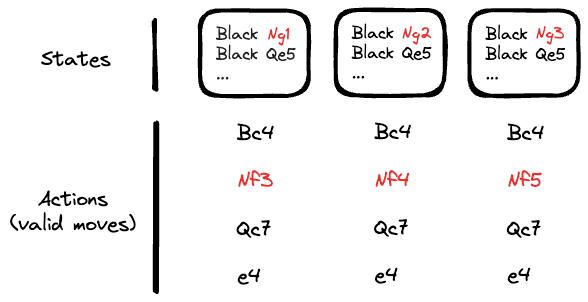

More formally, we can define a policy as a mapping between states and actions:

Given a certain state on the chess board where some of the pawns are out, a knight is on a certain square, white’s bishop is threatening black’s queen, etc., the chess bot should move its rook to D2 to get closer to checkmate.

Given a certain set of pixels with certain colors that forms a fuzzy image that kind of looks like a koala, the next pixel generated by Midjourney should be

rgb(233, 242, 79)atx = 27, y = 139to get closer to the finished koala.Given a certain set of words that comprises a conversation up until this point, a chatbot should say the word “fries” next to get closer to…more on this later.

As humans, we intuitively know what to do in a given moment. You could say that we’re constantly updating “policies” in our head and acting on them—not that the brain actually works that way, but we can pretend for the sake of argument. Our reasoning is opaque and ranges from “this is rational” to “this feels right” to “I dunno.”

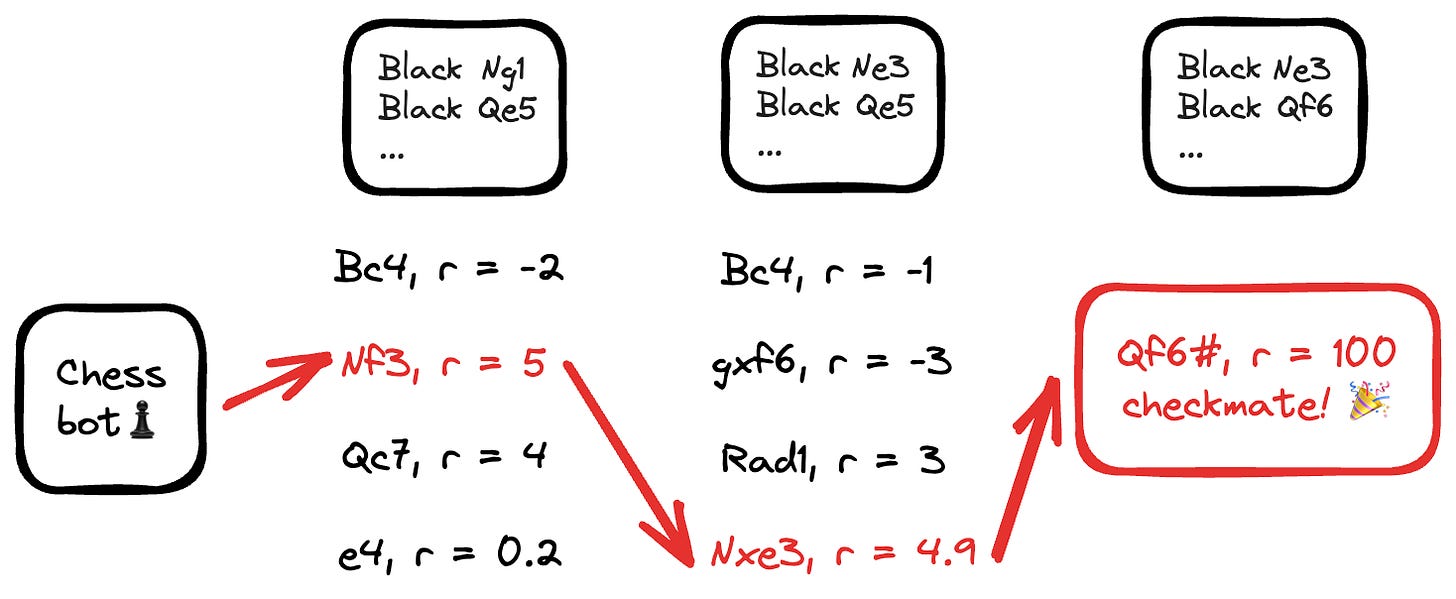

AI agents in contrast have a concrete measure of why certain actions are better than others given a certain state. That measure is called reward, which is stored as a number (let’s label reward as r in the diagram below):

You can think of reward as points. The agent gets more points if it takes an action that brings it closer to its goal and it gets less points for actions that take it further away.

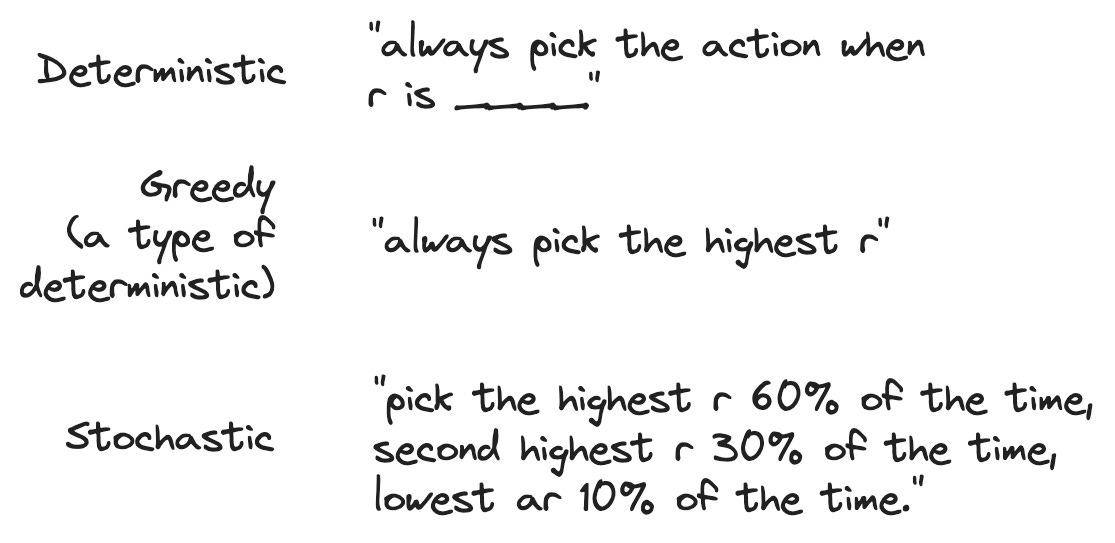

Policies map states to actions in a one-to-many relationship. You can take any number of actions at any given point, and some are better and some are worse, just like how there are great moves, OK moves, and crappy moves on a chessboard. The better moves have greater rewards associated with them and the worse ones have lesser, even negative, rewards. A policy that always takes the next action that has the greatest reward is called greedy.

In its simplest form, a policy can be stored as a table, which you can generalize as a function: given a certain state, tell me how much reward I get for every next action that I could potentially take. A greedy policy, which is a kind of deterministic policy, then tells the agent to take the action with the highest reward. It’s like a chess player who only knows one way to play.

In contrast, we can also have policies that are more nuanced, perhaps ones that intentionally select actions with lower rewards from time to time. Stochastic policies, for example, choose an action based on a probability. Perhaps the policy is programmed to pick the action with the highest reward only 60% of the time. The main reason you would want to do this is to introduce variety—into the chess bot’s strategy, into the image generator’s painting, and into the chatbot’s conversation.

Regardless of whether our agent uses a deterministic or a stochastic policy, it fundamentally decides on a course of action based on reward. The only difference is how the policy relates to the reward (always go for the highest reward? Sometimes go for the highest reward?)

But how do we know how much reward is associated with each action for every given state? Stay tuned for part 2, where we delve into how AI systems learn rewards.